Struct Node *left,*right /* Left and right leafs */īut i not at all understand how can i get the symbol and from ".bin" file (which consists of only "0" and "1") ? Unsigned char symbol /* the symbol or alphabets */ Whereas frequency is the repetition of the number of alphabets (eg, abbacdd here freq of a=2, b=2 ,c=1, d=2).Īnd my structure must be like this: struct Node

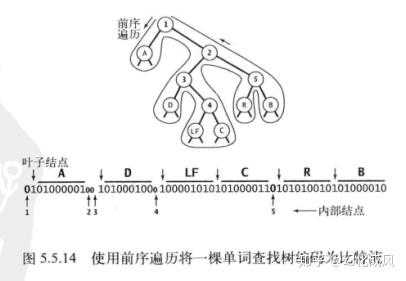

I have a doubt that binary files are the files which contains "0" and "1" only. We then apply the process again, on the new internal node and on the remaining nodes (i.e., we exclude the two leaf nodes), we repeat this process until only one node remains, which is the root of the Huffman tree.I have to calculate frequency of Huffman tree from a "binary file" as sole argument. The weight of the new node is set to the sum of the weight of the children. Then, the process takes the two nodes with smallest probability, and creates a new internal node having these two nodes as children. The process begins with the leaf nodes containing the probabilities of the symbol they represent.

HUFFMAN TREEE INPUT VECTOR FREQ OUTPUT VECTOR CODE CODE

A Huffman tree that omits unused symbols produces the most optimal code lengths. Formalized description Īlphabet A = ( a 1, a 2, …, a n ) internal nodes. Find A prefix-free binary code (a set of codewords) with minimum expected codeword length (equivalently, a tree with minimum weighted path length from the root). Huffman coding is such a widespread method for creating prefix codes that the term "Huffman code" is widely used as a synonym for "prefix code" even when such a code is not produced by Huffman's algorithm.Ĭonstructing a Huffman Tree Informal description Given A set of symbols and their weights (usually proportional to probabilities). Huffman coding uses a specific method for choosing the representation for each symbol, resulting in a prefix code (sometimes called "prefix-free codes", that is, the bit string representing some particular symbol is never a prefix of the bit string representing any other symbol). Building the tree from the bottom up guaranteed optimality, unlike the top-down approach of Shannon–Fano coding. In doing so, Huffman outdid Fano, who had worked with Claude Shannon to develop a similar code. Huffman, unable to prove any codes were the most efficient, was about to give up and start studying for the final when he hit upon the idea of using a frequency-sorted binary tree and quickly proved this method the most efficient. Fano, assigned a term paper on the problem of finding the most efficient binary code. Huffman and his MIT information theory classmates were given the choice of a term paper or a final exam.

However, although optimal among methods encoding symbols separately, Huffman coding is not always optimal among all compression methods - it is replaced with arithmetic coding or asymmetric numeral systems if a better compression ratio is required. Huffman's method can be efficiently implemented, finding a code in time linear to the number of input weights if these weights are sorted. As in other entropy encoding methods, more common symbols are generally represented using fewer bits than less common symbols. The algorithm derives this table from the estimated probability or frequency of occurrence ( weight) for each possible value of the source symbol.

The output from Huffman's algorithm can be viewed as a variable-length code table for encoding a source symbol (such as a character in a file). student at MIT, and published in the 1952 paper "A Method for the Construction of Minimum-Redundancy Codes". The process of finding or using such a code proceeds by means of Huffman coding, an algorithm developed by David A. In computer science and information theory, a Huffman code is a particular type of optimal prefix code that is commonly used for lossless data compression. (This assumes that the code tree structure is known to the decoder and thus does not need to be counted as part of the transmitted information.) Char Encoding the sentence with this code requires 135 (or 147) bits, as opposed to 288 (or 180) bits if 36 characters of 8 (or 5) bits were used. The frequencies and codes of each character are below. Huffman tree generated from the exact frequencies of the text "this is an example of a huffman tree".

0 kommentar(er)

0 kommentar(er)